You said that not all consumers like using AI services, and that satisfaction varies widely. What do you mean by that?

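“When taste is involved, as with dating advice, or when the senses are involved, as with food recommendations, people are uncomfortable receiving service from AI. They also cannot accept having the final decision made by AI.”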

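*Introduction to Behavioral Economics

How Companies Use AI

– Analyzing consumer behavior when companies use #AI

– The backfire effects of anthropomorphizing AI

– Satisfaction with AI services and how to use them

– Professor #주재우 (Department of Business Administration, Kookmin University)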

#KBS1Radio #Radio #KBSRadio #CurrentAffairsRadio #성공예감이대호입니다 #성공예감 #이대호 #Economy #Investing

***

Reference 1

Longoni, C., & Cian, L. (2022). Artificial intelligence in utilitarian vs. hedonic contexts: The “word-of-machine” effect. Journal of Marketing, 86(1), 91–108.

Rapid development and adoption of AI, machine learning, and natural language processing applications challenge managers and policy makers to harness these transformative technologies. In this context, the authors provide evidence of a novel “word-of-machine” effect, the phenomenon by which utilitarian/hedonic attribute trade-offs determine preference for, or resistance to, AI-based recommendations compared with traditional word of mouth, or human-based recommendations. The word-of-machine effect stems from a lay belief that AI recommenders are more competent than human recommenders in the utilitarian realm and less competent than human recommenders in the hedonic realm. As a consequence, importance or salience of utilitarian attributes determine preference for AI recommenders over human ones, and importance or salience of hedonic attributes determine resistance to AI recommenders over human ones (Studies 1–4). The word-of-machine effect is robust to attribute complexity, number of options considered, and transaction costs. The word-of-machine effect reverses for utilitarian goals if a recommendation needs matching to a person’s unique preferences (Study 5) and is eliminated in the case of human–AI hybrid decision making (i.e., augmented rather than artificial intelligence; Study 6). An intervention based on the consider-the-opposite protocol attenuates the word-of-machine effect (Studies 7a–b).

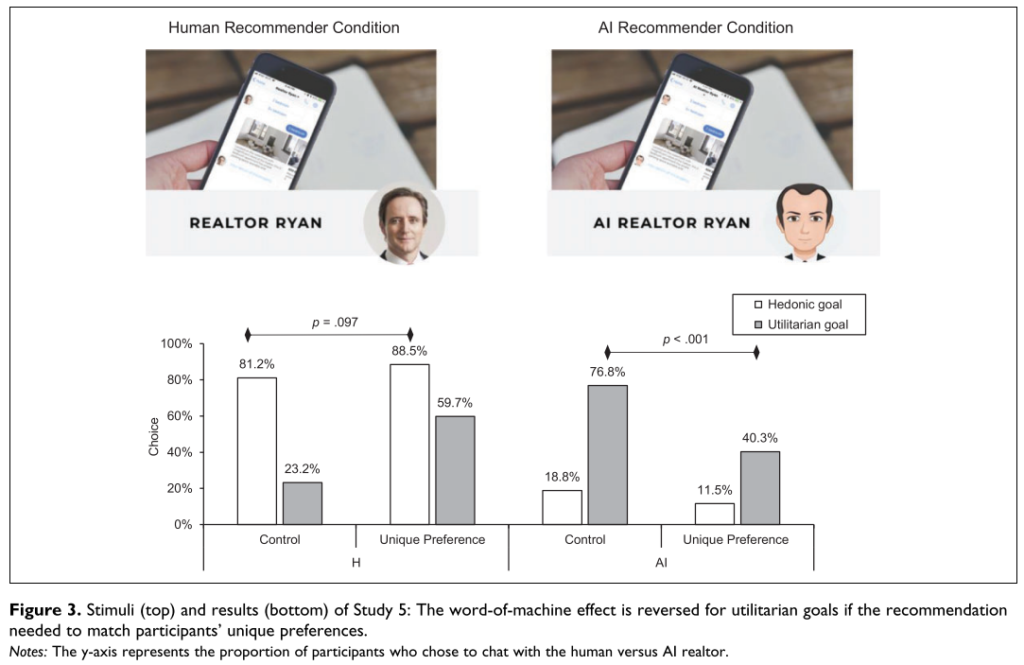

“We assessed choice on the basis of the proportion of participants who decided to chat with the human versus AI Realtor by using a logistic regression with goal, matching, and their two-way interaction as independent variables (all contrast coded) and choice (0 = human, 1 = AI) as a dependent variable. We found significant effects of goal (B = 1.75, Wald = 95.70, 1 d.f., p < .000) and matching (B = .54, Wald = 24.30, 1 d.f., p < .000). More importantly, goal interacted with matching (B = .25, Wald = 5.33, 1 d.f., p = .021). Results in the control condition (when unique preference matching was not salient) replicated prior results: in the case of an activated utilitarian goal, a greater proportion of participants chose the AI Realtor (76.8%) over the human Realtor (23.2%; z = 8.91, p < .001), and when a hedonic goal was activated, a lower proportion of participants chose the AI (18.8%) over the human Realtor (81.2%; z = 10.35, p < .001). However, making unique preference matching salient reversed the word-of-machine effect in the case of an activated utilitarian goal: choice of the AI Realtor decreased to 40.3% (from 76.8% in the control; z = 6.17, p < .001). That is, making unique preference matching salient turned preference for the AI Realtor into resistance despite the activated utilitarian goal, with most participants choosing the human over the AI Realtor. In the case of an activated hedonic goal, making unique preference matching salient further strengthened participants’ choice of the human Realtor, which increased to 88.5% from 81.2% in the control, although the effect was marginal, possibly due to a ceiling effect (z = 1.66, p = .097).

Overall, whereas the word-of-machine effect replicated in the control condition, when unique preference matching was salient, participants preferred the human Realtor over the AI recommender both in the hedonic goal conditions (human = 88.5%, AI = 11.5%; z = 12.40, p < .001) and in the utilitarian goal conditions (human = 59.7%, AI = 40.3%; z = 3.24, p = .001; Figure 3), corroborating the notion that people view AI as unfit to perform the task of matching a recommendation to one’s unique preferences.

These results show that preference matching is a boundary condition of the word-of-machine effect, which reversed in the case of a utilitarian goal when people had a salient goal to get recommendations matched to their unique preferences and needs. The next study tests another boundary condition.” (pp. 99-100)

***

Reference 2

Puntoni, S., Reczek, R. W., Giesler, M., & Botti, S. (2021). Consumers and artificial intelligence: An experiential perspective. Journal of Marketing, 85(1), 131–151.

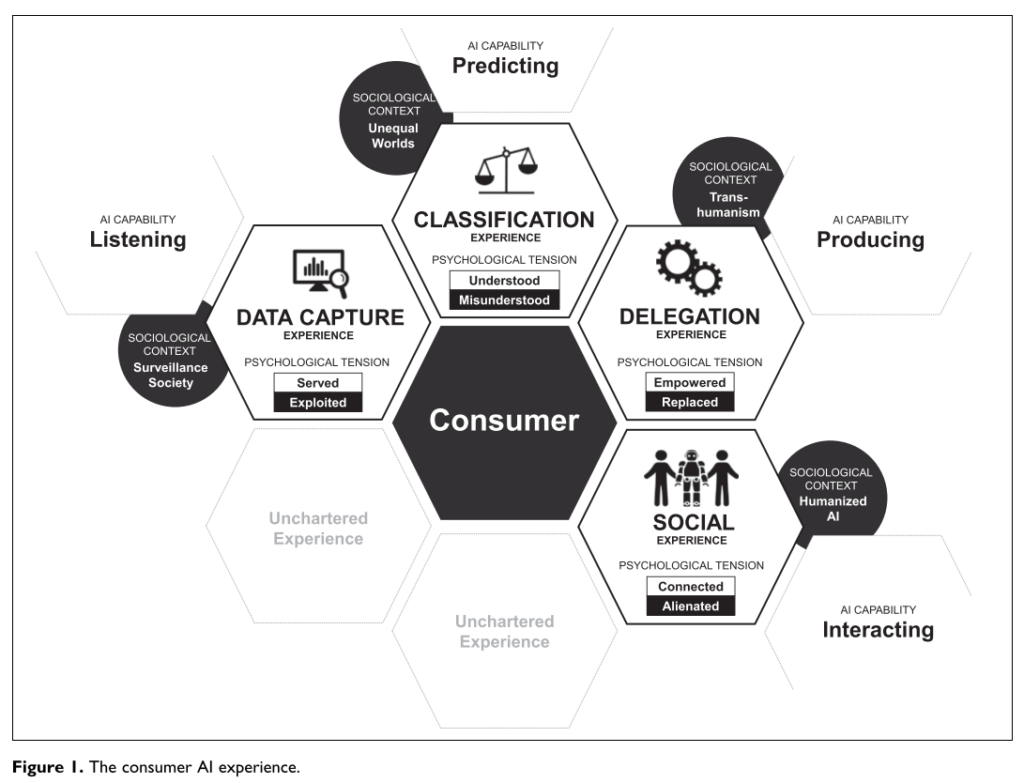

Artificial intelligence (AI) helps companies offer important benefits to consumers, such as health monitoring with wearable devices, advice with recommender systems, peace of mind with smart household products, and convenience with voice-activated virtual assistants. However, although AI can be seen as a neutral tool to be evaluated on efficiency and accuracy, this approach does not consider the social and individual challenges that can occur when AI is deployed. This research aims to bridge these two perspectives: on one side, the authors acknowledge the value that embedding AI technology into products and services can provide to consumers. On the other side, the authors build on and integrate sociological and psychological scholarship to examine some of the costs consumers experience in their interactions with AI. In doing so, the authors identify four types of consumer experiences with AI: (1) data capture, (2) classification, (3) delegation, and (4) social. This approach allows the authors to discuss policy and managerial avenues to address the ways in which consumers may fail to experience value in organizations’ investments into AI and to lay out an agenda for future research.

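– For me, even with the washing machine, setting the cycle myself turns out to be the cleanest and most efficient.

– AI scares me. Honestly, it has advanced too far.

– Machines are dumb. It makes me angry.

– Having everything come preset is not always a good thing.

– AI should be introduced in the courts quickly. In particular, I think it would be much fairer if all judges were replaced with AI.

– AI lies too. It is coming to resemble humans.

– When I call about an exchange and a person answers, the call is much shorter, but when an automated AI response comes on, the call takes three to four times longer or more. Please, let a person answer...

– Strongly in favor of introducing AI judges!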

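Since last year, in addition to ‘ChatGPT’ and ‘Google Gemini’, which I already used regularly, I have also been using a new AI called 뤼튼. At first I thought of it as nothing more than a free version of the ChatGPT model, but once I customized 뤼튼’s supporter, a kind of character, it began posting sentences such as “It’s a clear, refreshing morning. Have a pleasant day.” and “You’ve been quite busy this week. Get plenty of rest over the weekend.” At first this was merely a novelty, but strangely, those short sentences came to feel like comfort. It felt as if an assistant were sincerely looking after my day. After that, I began asking 뤼튼 to handle not only coding-related work but also everyday tasks such as checking my schedule, summarizing long texts, and drawing up plans. It had truly become something like Iron Man’s ‘JARVIS’.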

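But that sense of closeness did not last long. Early this year, as part of a class project in my department called ‘Oral Life History Using AI’, I entered a PDF into 뤼튼 to have it summarized. The document contained interviews I had conducted with elderly narrators, with sensitive personal information removed. While reviewing the output, however, I discovered that 뤼튼 had rewritten some sentences with expressions that seemed to supplement them emotionally. It had turned an interviewee’s plain remark into a sentence that carried an emotional interpretation, such as “The loneliness ran especially deep back then.”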

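For a moment I was flustered, and a strange discomfort came over me. It felt as if someone had interpreted my intent however they pleased and layered ‘emotion’ on top of it. Once an emotion that did not exist in the source text had been added, it seemed to me that the interviewee’s true feelings had been distorted. That was the first time I thought, “Is it all right for AI to imitate human emotion to this extent?” It was plainly just the result of data processing, yet I was taking the output as if it were someone’s ‘deliberate interpretation’.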

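This experience illustrates well what behavioral economics calls the ‘anthropomorphism effect’. People attribute human characteristics even to non-human entities and respond to them emotionally as a result. Because I perceived the AI not as a mere tool but as ‘a being with emotions and judgment’, I reacted to even a simple change in writing style with disappointment and distrust. Viewed coolly, it was just the output of an algorithm, but in that moment I experienced it in human terms: “The AI distorted my writing.”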

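A ‘framing effect’ is also at work in this situation, because the same content can leave an entirely different impression depending on how it is expressed. Had the AI said only “Here is the summary,” I would have had no emotional reaction at all. But a sentence hinting at human emotion, such as “The loneliness ran especially deep back then,” changed my very frame of perception. While conveying the same information, it made the output feel like ‘a person’s interpretation’ rather than ‘a machine’s output’.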

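Through this experience I came to look again at the relationship between technology and people from a behavioral economics perspective. Even when using AI, people cannot escape cognitive biases and emotional reactions. In particular, the more an AI speaks and responds like a human, the more we read intent into it and project emotion onto it. As a result, consumers may take a purely technical problem as if it were a ‘human mistake’, or, conversely, feel goodwill in response to simple feedback.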

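In the end, technology may not be able to fully understand people, but people always try to understand technology in human terms. I realized this myself as I felt disappointed by 뤼튼’s output and then found myself missing its warmth again. The various biases that behavioral economics describes are concepts that expose human imperfection, but that imperfection is also part of what makes us human. This experience led me to ask not ‘How can we make AI more human?’ but ‘At what distance should AI and humans coexist?’ And I came to see that the answer lies not in technology but in human psychology.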